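Notes on problems encountered when building a project with k8s, Jenkins, Docker, git, mvn, and kubectl

Environment

k8s 1.18

Jenkins deployed as a pod

Harbor deployed separately

Rancher deployed separately

Builds run as a pipeline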

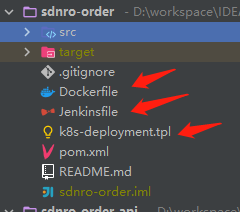

Project directory structure

I. Docker command problems when Jenkins is deployed as a pod

Mounted directories

    volumeMounts:
      - mountPath: /var/jenkins_home
        name: jenkins-data
      - mountPath: /run/docker.sock          # Docker socket
        name: docker
      - mountPath: /usr/bin/docker           # Docker CLI binary
        name: docker-home
      - mountPath: /etc/docker/daemon.json   # Docker's Harbor configuration
        name: daemon
        subPath: daemon.json
    volumes:
      - name: jenkins-data
        persistentVolumeClaim:
          claimName: jenkins-pv-claim
      - hostPath:
          path: /run/docker.sock
          type: ''
        name: docker
      - hostPath:
          path: /usr/bin/docker
          type: ''
        name: docker-home
      - hostPath:
          path: /etc/docker/
          type: ''
        name: daemon                         # matches the daemon mount above

Docker command permission problem:

Got permission denied while trying to connect to the Docker daemon socket at unix:///var/run/docker.sock: Post http://%2Fvar%2Frun%2Fdocker.sock/v1.40/auth: dial unix /var/run/docker.sock: connect: permission denied

Permanent fix: on the host where the pod runs, grant access with chmod a+rw /var/run/docker.sock
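After running the chmod, it can be worth confirming that the socket really is readable and writable by every user, and checking again after a host reboot, because on most modern distributions /var/run lives on a tmpfs and the socket is recreated at boot. A minimal sketch, assuming GNU `stat` is available on the host; `is_world_rw` is a hypothetical helper name, not part of Docker:

```shell
# Hypothetical helper: succeeds if "other" users have both read and
# write permission on the given path (which is what `chmod a+rw` grants).
is_world_rw() {
    mode=$(stat -c '%a' "$1") || return 2   # octal mode, e.g. 660 or 666
    other=$((mode % 10))                    # last digit = permissions for "other"
    [ $((other & 6)) -eq 6 ]                # 4 (read) + 2 (write)
}

# Usage on the host that runs the Jenkins pod:
# is_world_rw /var/run/docker.sock && echo "socket is world read/write"
```

Note that opening the socket to all users effectively gives every local account control of the Docker daemon, so this check is only meant to confirm the fix described above, not to recommend it for shared hosts.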

    stage('Docker Build') {
        when {
            allOf {
                expression { env.GIT_TAG != null }
            }
        }
        agent any
        steps {
            unstash 'app'
            sh "docker login -u ${HARBOR_CREDS_USR} -p ${HARBOR_CREDS_PSW} ${params.HARBOR_HOST}"
            // Pass the built jar (excluding any sources jar) to the Dockerfile as JAR_FILE
            sh '''cd /var/jenkins_home/workspace/${APP_NAME} &&
            docker build --build-arg JAR_FILE=`ls target/*.jar |cut -d '/' -f2 | grep -v sources.jar` -t ${HARBOR_HOST}/${DOCKER_IMAGE}:${GIT_TAG} .'''
            sh "docker push ${HARBOR_HOST}/${DOCKER_IMAGE}:${GIT_TAG}"
            // Remove the local image once it has been pushed
            sh "docker rmi ${HARBOR_HOST}/${DOCKER_IMAGE}:${GIT_TAG}"
        }
    }

II. Configuring git to connect over SSH

ssh-keygen -t rsa -b 4096 -C "your_email@example.com"

1. The custom gitea domain name cannot be resolved

kubectl edit configmap -n kube-system coredns

    apiVersion: v1
    data:
      Corefile: |
        .:53 {
            errors
            health {
               lameduck 5s
            }
            ready
            kubernetes cluster.local in-addr.arpa ip6.arpa {
               pods insecure
               fallthrough in-addr.arpa ip6.arpa
               ttl 30
            }
            prometheus :9153
            forward . /etc/resolv.conf
            cache 30
            loop
            reload
            loadbalance
            # Add the custom domain name mappings here
            hosts {
               192.168.0.51 gitea.zkldragon.org
               192.168.0.81 apiserver.zkl
               fallthrough
            }
        }

2. The key file's permissions may be too open when it is used

    stderr: Load key "/var/jenkins_home/caches/git-176be9ffd92b88531be55ea35a73a529@tmp/jenkins-gitclient-ssh12692249424673379337.key": invalid format
    @@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@
    @ WARNING: UNPROTECTED PRIVATE KEY FILE! @
    @@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@
    Permissions 0660 for '/var/jenkins_home/.ssh/id_rsa' are too open.

Changing the permissions to 600 fixes it:

    chmod 0600 /etc/ssh/ssh_host_rsa_key
    chmod 0600 /etc/ssh/ssh_host_ecdsa_key
    chmod 0600 /etc/ssh/ssh_host_ed25519_key
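The error message itself names /var/jenkins_home/.ssh/id_rsa, so the same mode change presumably has to be applied to that private key too. A minimal sketch, assuming GNU `stat` is available inside the Jenkins container; `tighten_key` is a hypothetical helper name:

```shell
# Hypothetical helper: restrict a private key to owner read/write (0600)
# and print the resulting octal mode so the change can be confirmed.
tighten_key() {
    chmod 0600 "$1" && stat -c '%a' "$1"
}

# Usage inside the Jenkins container (path taken from the error message):
# tighten_key /var/jenkins_home/.ssh/id_rsa
```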

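III. Specifying settings.xml for mvn builds (private repository)

Because the mvn command is executed inside the pipeline, using a private repository requires pointing mvn at your own settings file, as follows.

Adding

--settings /var/jenkins_home/apache-maven-3.8.5/conf/setting.xml

is enough.

/var/jenkins_home is bound to files on the host, so no data is lost even when the Jenkins container is recreated.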

    stage('Maven Build') {
        when { expression { env.GIT_TAG != null } }
        steps {
            // Build with the private-repository settings file and skip the tests
            sh '/var/jenkins_home/apache-maven-3.8.5/bin/mvn clean package --settings /var/jenkins_home/apache-maven-3.8.5/conf/setting.xml -Dfile.encoding=UTF-8 -DskipTests=true'
            // Save the jar for the later Docker Build stage
            stash includes: 'target/*.jar', name: 'app'
        }
    }

IV. Deploying the project with kubectl, using a Docker helm image as the pipeline agent

    stage('Deploy') {
        when {
            allOf {
                expression { env.GIT_TAG != null }
            }
        }
        agent {
            docker {
                image 'lwolf/helm-kubectl-docker'
                args '-u root:root --add-host apiserver.zkl:192.168.0.81' // works around the permission problem
            }
        }
        steps {
            sh "echo '192.168.0.81 apiserver.zkl' >> /etc/hosts" // works around the domain name resolution problem
            sh "mkdir -p ~/.kube"
            // K8S_CONFIG holds the base64-encoded kubeconfig
            sh "echo ${K8S_CONFIG} | base64 -d > ~/.kube/config"
            // Fill the placeholders in the deployment template
            sh "sed -e 's#{IMAGE_URL}#${HARBOR_HOST}/${DOCKER_IMAGE}#g;s#{IMAGE_TAG}#${GIT_TAG}#g;s#{APP_NAME}#${APP_NAME}#g;s#{K8S_NAMESPACE}#${K8S_NAMESPACE}#g;s#{SPRING_PROFILE}#k8s-test#g' k8s-deployment.tpl > ${K8S_APP_YAML}"
            sh "kubectl apply -f ${K8S_APP_YAML} --namespace=${K8S_NAMESPACE}"
        }
    }

1. Permission problem

    + mkdir -p //.kube
    mkdir: can't create directory '//.kube': Permission denied

Add '-u root:root' to args.

2. kubectl cannot resolve the apiserver.zkl domain name

    + kubectl apply -f sdnro-order-k8s.yaml '--namespace=sdnro'
    Unable to connect to the server: dial tcp: lookup apiserver.zkl on 192.168.0.1:53: no such host

At first I used --add-host apiserver.zkl:192.168.0.81 here, but it did not seem to have any effect.

So I did the following instead:

sh "echo '192.168.0.81 apiserver.zkl' >> /etc/hosts" writes the entry directly into the hosts file from an sh step.
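Because `>>` appends every time it runs, a container that is reused across builds could collect duplicate lines in its hosts file. A minimal sketch of an idempotent variant; `add_host_entry` is a hypothetical helper name, and the file path is a parameter so it can be tried on a scratch file first:

```shell
# Hypothetical helper: append "IP NAME" to a hosts file only if that
# exact line is not already present.
add_host_entry() {
    hosts_file=$1
    entry="$2 $3"
    # -x matches whole lines, -F treats the entry as a literal string
    grep -qxF "$entry" "$hosts_file" 2>/dev/null || printf '%s\n' "$entry" >> "$hosts_file"
}

# Usage with the values from this section:
# add_host_entry /etc/hosts 192.168.0.81 apiserver.zkl
```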

V. Running the jar image with Docker fails with "docker no main manifest attribute"

Add this configuration to pom.xml:

    <build>
      <plugins>
        <plugin>
          <groupId>org.springframework.boot</groupId>
          <artifactId>spring-boot-maven-plugin</artifactId>
          <version>2.2.6.RELEASE</version>
          <executions>
            <execution>
              <goals>
                <goal>repackage</goal>
              </goals>
            </execution>
          </executions>
        </plugin>
      </plugins>
    </build>
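The error means that the jar's META-INF/MANIFEST.MF has no Main-Class entry, which the repackage goal writes when it builds the executable jar. A minimal sketch for checking a built jar before it goes into the image, assuming `unzip` is installed; `has_main_class` is a hypothetical helper name, and the jar name in the usage line is a placeholder:

```shell
# Hypothetical helper: reads a manifest on stdin and succeeds if it
# declares a Main-Class entry.
has_main_class() {
    grep -q '^Main-Class:'
}

# Usage (the jar name is a placeholder):
# unzip -p target/app.jar META-INF/MANIFEST.MF | has_main_class || echo "no main manifest attribute"
```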

A few commands that are useful here

View the logs of the jar running in Docker

docker logs -f c40412900f7b (containerId)

View pod information

kubectl describe pod sdnro-order-deployment-598785d96-wf79p -n sdnro

    Name:         sdnro-order-deployment-598785d96-wf79p
    Namespace:    sdnro
    Priority:     0
    Node:         k8snode2/192.168.0.83
    Start Time:   Fri, 24 Mar 2023 14:30:17 +0800
    Labels:       app=sdnro-order
                  pod-template-hash=598785d96
    Annotations:  cni.projectcalico.org/podIP: 10.100.185.228/32
                  cni.projectcalico.org/podIPs: 10.100.185.228/32
    Status:       Running
    IP:           10.100.185.228
    IPs:
      IP:           10.100.185.228
    Controlled By:  ReplicaSet/sdnro-order-deployment-598785d96
    Containers:
      sdnro-order:
        Container ID:   docker://9261531198a4cc6f633a2fc75c9bc234a97368203e05458de6e31f9647c4f81b
        Image:          192.168.0.92/sdnro/sdnro-order:bd14322
        Image ID:       docker-pullable://192.168.0.92/sdnro/sdnro-order@sha256:97853a33ad40368f9d22fc0bfc8482a409aff3065916f0f9910d63d62996a705
        Port:           8083/TCP
        Host Port:      0/TCP
        State:          Waiting
          Reason:       CrashLoopBackOff
        Last State:     Terminated
          Reason:       Error
          Exit Code:    1
          Started:      Fri, 24 Mar 2023 14:35:57 +0800
          Finished:     Fri, 24 Mar 2023 14:36:28 +0800
        Ready:          False
        Restart Count:  5
        Environment:
          SPRING_PROFILES_ACTIVE:  k8s-test
        Mounts:
          /var/run/secrets/kubernetes.io/serviceaccount from default-token-gbzvv (ro)
    Conditions:
      Type              Status
      Initialized       True
      Ready             False
      ContainersReady   False
      PodScheduled      True
    Volumes:
      default-token-gbzvv:
        Type:        Secret (a volume populated by a Secret)
        SecretName:  default-token-gbzvv
        Optional:    false
    QoS Class:       BestEffort
    Node-Selectors:  <none>
    Tolerations:     node.kubernetes.io/not-ready:NoExecute for 300s
                     node.kubernetes.io/unreachable:NoExecute for 300s
    Events:
      Type     Reason     Age                     From               Message
      ----     ------     ----                    ----               -------
      Normal   Scheduled  7m55s                   default-scheduler  Successfully assigned sdnro/sdnro-order-deployment-598785d96-wf79p to k8snode2
      Normal   Pulling    7m54s                   kubelet, k8snode2  Pulling image "192.168.0.92/sdnro/sdnro-order:bd14322"
      Normal   Pulled     7m49s                   kubelet, k8snode2  Successfully pulled image "192.168.0.92/sdnro/sdnro-order:bd14322"
      Normal   Created    4m15s (x5 over 7m48s)   kubelet, k8snode2  Created container sdnro-order
      Normal   Started    4m15s (x5 over 7m48s)   kubelet, k8snode2  Started container sdnro-order
      Normal   Pulled     4m15s (x4 over 7m15s)   kubelet, k8snode2  Container image "192.168.0.92/sdnro/sdnro-order:bd14322" already present on machine
      Warning  BackOff    2m53s (x12 over 6m44s)  kubelet, k8snode2  Back-off restarting failed container

Open a shell in the image with Docker to inspect its files; this shows what the jar you packaged actually looks like

docker run -it --entrypoint sh d0e5d51a31e0 (image id)

View the container's bind-mounted directories

docker inspect d03b985a513e|grep Mounts -A 20

View pod logs

kubectl logs sdnro-order-deployment-67bdb5dbf-lf4p4 -n sdnro

Enter the pod

kubectl exec -it sdnro-order-deployment-67bdb5dbf-wfpkw -n sdnro -- sh

Enter a container with Docker

    [root@k8snode1 docker]# docker ps -a | grep jenki
    d03b985a513e d5ed2ceef0ec "/usr/bin/tini -- /u…"
    [root@k8snode1 docker]# docker exec -it d03b985a513e bash
    jenkins@jenkins-5f7c4bc78-pl4ms:/$

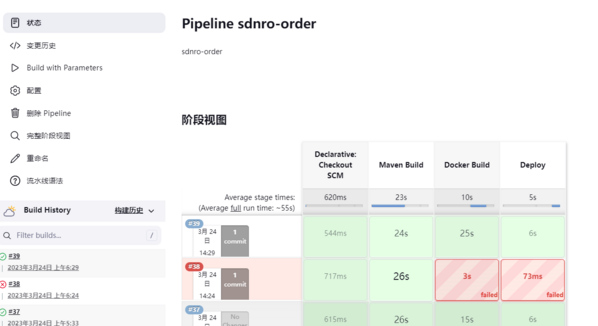

Final result:

Jenkins result

Harbor result

Nacos result
